Field Report: Coding in the Age of AI with Cursor

A real Field Report out of the trences of AI-assisted coding with its ups and downs.

This report presents practical methodologies and best practices for developing software using Cursor, an AI-assisted IDE. The paper details a structured workflow that emphasizes specification-driven development, comprehensive documentation practices, and systematic task management to maintain quality control when working with language models. Through detailed examples and rule configurations, it demonstrates how to leverage AI capabilities while mitigating common pitfalls such as redundant code generation and context limitations. The methodology presented includes comprehensive PRD (Product Requirement Document) creation, specification adherence checks, and task breakdown systems that ensure alignment between human intent and AI execution. This guide serves as a practical reference for developers seeking to effectively integrate AI tools into their software development workflow while maintaining project integrity and scalability.

Introduction

In the fast evolving field of AI there is a clear lack of reports on “what really works”. Some techniques hailed as revolution (like the DeepSeek Aha-Moment[1]) for unlimited potential were soon realized to “just” optimize niche problems that can benchmarked[2]1.

1 Like all decent humans i ain’t got time to read up on everything - so a big shoot-out to [3] for doing thorough reviews on ML-topics and linking the respective papers!

2 i.e. the “base model” nearly all papers tested their finding on (qwen-series) also gets better with RLVR-optimization if rewards are random instead of verified

I personally think it is an exercise in futility to get a current theoretical overview for forming a decent grounded opinion on the state of things. Even before one is done analyzing the literature, crossrefercencing and collecting evidence and then finally formulating methods and implementing them, the next revolution comes around that could put everything on its head again. In the afromentioned example the community went from “Reasoning is the solution” in January[1] over first critical views in March[4] to doubts on that claims validity of generating concepts previously not present in the base model in May[5] to complete ad-absurdum in June[2]2.

Therefore see this “Field Guide” for what it is: A current state of things that work for at least 1 Individuum in exactly this ecosystem at this point in time.

How to program with Cursor

In essence Cursor is “just” a fork of Microsofts VSCode with some added functionality: Automatically injecting files into LLM-Prompts, offering tool-aware LLMs to use MCPs, read the filesystem, execute arbitrary commands in the shell (either automatically or after permission), getting feedback from the editor (i.e. installed linters, language-servers etc.) and thus have the same (or even better) information/tools available as the programmer in front of the screen.

Capabilities / General procedure

The main issue is now: theoretically agentic IDEs can get all information - practically it is limited directly by token-window sizes, costs of these queries; and indirectly by outsourced costs like environmental impacts, data-security, etc. The suppliers of such services can claim privacy as much as they want - it can’t be proven and (especially under US-Law) is not even possible to resist lawful orders (including the gag-order to not talk about these).

In practise one feels the direct pain points more severely. Some regular examples include generating redundant code, because the current context was not aware of utility-modules and functions it could use - leading to huge technical debt in no time.

Therefore my preferred workflow is to “think bigger”. Imagine being a product owner of a huge, sluggish company. The left hand never knows what the right hand does or has done (i.e. the LLM forgetting things already exist in the codebase), everything has to be rigorous defined, specified and enforced. Some people reported good results with Test-Driven-Development (TDD) - but in my experience these things only prevent regressions and not proactively enforce the desired agent behaviour.

Lessons from Project Management

This may be a duh!-Moment for people longer in Software-Engineering, but most of the time is getting the specifications of what you want to build right. Asking questions. Interviewing stakeholders. Gathering user experience reports. Most of it is not actually writing code - but deciding on what to write and how.

For this i created some rules in my workflow that interleave with their responsibilities and outcomes. Especially in the planning phase the LLM is encouraged to ask questions, find edge-cases or even look at other implementations. One could also think of letting the agent do a websearch, read reports and forums on how good which framework works and then arguments on why this framework is good - and also why it is not good. The last decision of all pro/contra is by the actual human.

The main theme always follows a similar pattern:

- A need is found.

This could be a bug, a feature, some changes to existing behaviour etc. - An investigation is launched, yielding a Product Requirement Document (PRD).

This dives into the codebase to asses the current state of things. Maybe some bugs are obvious and easily fixed.

This formalizes that the LLM understood what should be done and especially what is out of scope. - Pin the desired behaviour in a Specification.

Either this means changing currently established specifications (i.e. bug/change) or writing complete new ones (i.e. feature). - Investigate Spec-Compliance.

Again the agent looks at the codebase to identify where things should change and how. Also recommendation are made on how it could achieve the goal. - Generate Tasks.

From the compliance-report of spec-deviations (either from a bug or from a new/changed spec) finally a Plan to fix everything can be derived (think: Sprint-Planning). - NOTE: Up to here the agent never touched the code.

- Finally Implement the change.

This is most often the most trivial step. Everything is known and formulated for “simple” Agents to just follow. It names files needed, specs to follow, guidelines on how to do things in the repository etc.

Implementation

I only go briefly over the most important aspects of the implementation in Rules and their results. As this is also evolving with experience, there will be updates to those on the published place.

The Rules

Cursor rules are written in markdown with a small yaml-frontmatter. description is a string providing the agent with a description of the rule so it can be called like a tool (and basically injects the rule-content into the context). globs automatically inject the rule when the glob matches one attached filename. alwaysApply injects the rule into all contexts-windows.

Always apply rule: Basic info and behaviour

This tells the agent the project-structure and fixes some common pitfalls (i.e. the agent assuming bash, getting sidetracked, etc.).

---

description:

globs:

alwaysApply: true

---

# IMPORTANT INFORMATION! DO NOT FORGET!

## Conventions

- Run tests with `poetry run pytest`.

- **NO BASH IDIOMS IN COMMANDS**. Do not assume bash-usage. Use temporary

scripts instead of bash-idoms like `<<` for text-input to a process.

- Ask to update corresponding spec when you detect or implement a behaviour

change.

- Mention every time things are not implemented according to spec and offer to

make them compliant.

## Behavior of the Agent

- If you have a task, ONLY do the task. Do not get distracted by failing tests,

missing data etc. not related to your task.

- Spec compliance is key. Check corresponding specs before you fix behaviour or

ask the user if you should proceed.

## Where to look for information?

- Status & changes: `git status`, test failures in `tests/`

- Active feature work: `/tasks/<feature>/TASKS.md`

- Feature requirements: `/tasks/<feature>/PRD.md`

- Feature specifications: `/specs/`

- Source code: `/src/`

- Tests & fixtures: `/tests/`

- CLI entry point: `/src/<project>/cli/__main__.py`Get the PRD

---

description:

globs:

alwaysApply: false

---

## Product Requirements Document (PRD)

### Purpose

Draft a concise, implementation‑ready Product Requirements Document (PRD) from a

one‑sentence feature description plus any additional Q&A with the stakeholder.

### Output

- Create /tasks/<feature>/PRD.md

- Markdown only – no prose, no code‑fences.

- File structure:

> # <Feature title>

>

> ## 1. Problem / Motivation

>

> ## 2. Goals

>

> ## 3. Non‑Goals

>

> ## 4. Target Users & Personas

>

> ## 5. User Stories (Gherkin “Given/When/Then”)

>

> ## 6. Acceptance Criteria

>

> ## 7. Technical Notes / Dependencies

>

> ## 8. Open Questions

### Process

1. Stakeholder provides a single‑sentence feature idea and invokes this rule.

2. Look at specifications in `specs/` and inspect the code if needed to get an

idea what the Stakeholder expects from this feature.

3. Ask up to five clarifying questions (Q1 … Q5). If anything is still vague

after five, look into the project with the new information provided. You may

ask for further clarification up to 3 times following this schema, else flag

it in _Open Questions_.

4. After questions are answered reply exactly: Ready to generate the PRD.

5. On a user message that contains only the word "go" (case‑insensitive):

- Generate /tasks/<feature>/PRD.md following _Output_ spec.

- Reply: <feature>/PRD.md created – review it.

6. STOP. Do **not** generate tasks or code in this rule.

### Writing guidelines

- Keep each bullet ≤120 characters.

- Use action verbs and measurable language.

- Leave TBDs only in _Open Questions_.

- No business fluff – pretend the reader is a junior developer.

### Safety rails

- Assume all work happens in a non‑production environment, unless otherwise

stated or requested by you.

- Do not include sensitive data or credentials in the PRD.

- Check the generated Document with `markdownlint` (if available), apply

auto-fixes and fix the remaining issues manually.A call to this rule usually looks like @generate-prd We noticed, that …. Therefore investigate the codebase to come up with a PRD addressing these issues..

Specifications

---

description: Specification Writing Guidelines

globs:

alwaysApply: false

---

# Specification Writing Guidelines

## Overview

This rule provides guidelines for writing and maintaining specifications in

[specs/](mdc:specs) to ensure consistency, clarity, and prevent implementation

discrepancies.

## Specification Structure

### Required Sections

Every specification should include:

1. **Title and Purpose**

```markdown

# Specification: [Component Name]

Brief description of what this specification covers and its purpose.

```

2. **Scope and Boundaries**

- What is included/excluded

- Dependencies on other specifications

- Relationship to other components

3. **Detailed Requirements**

- Structured by logical sections

- Clear, unambiguous language

- Examples where helpful

4. **Error Handling**

- How errors should be handled

- Fallback behaviors

- Edge cases

5. **Testing Requirements**

- Expected test coverage

- Snapshot requirements

- Approval test criteria

## Writing Standards

### Clarity and Precision

- **Use specific language**: Avoid vague terms like "should" or "might"

- **Provide examples**: Include concrete examples for complex requirements

- **Define terms**: Clearly define any technical terms or concepts

- **Use consistent formatting**: Follow established patterns from existing specs

### Structure and Organization

- **Logical flow**: Organize sections in logical order

- **Consistent headings**: Use consistent heading levels and naming

- **Cross-references**: Link to related specifications using

`[spec_name](mdc:specs/spec_name.md)`

- **Code blocks**: Use appropriate language tags for code examples

### Completeness

- **Cover all cases**: Address normal, error, and edge cases

- **Be exhaustive**: Don't assume implementation details

- **Consider interactions**: How this spec relates to others

- **Future-proof**: Consider potential changes and extensions

## Specification Maintenance

### Version Control

- **Update specs first**: When changing behavior, update spec before

implementation

- **Document changes**: Use clear commit messages explaining spec changes

- **Review process**: Have specs reviewed before implementation

### Consistency Checks

- **Cross-reference validation**: Ensure all links to other specs are valid

- **Terminology consistency**: Use consistent terms across all specs

- **Format consistency**: Follow established formatting patterns

### Testing Integration

- **Spec-driven tests**: Write tests based on specification requirements

- **Snapshot validation**: Ensure snapshots match specification exactly

- **Approval tests**: Use approval tests to catch spec violations

## Quality Checklist

### Before Finalizing Specification

- [ ] All requirements clearly stated

- [ ] Examples provided for complex requirements

- [ ] Error cases covered

- [ ] Cross-references to other specs included

- [ ] Out of scope items clearly defined

- [ ] Testing requirements specified

- [ ] Consistent formatting throughout

- [ ] Check the generated Document with `markdownlint` (if available), apply

auto-fixes and fix the remaining issues manually.

### Review Criteria

- [ ] Is the specification unambiguous?

- [ ] Are all edge cases covered?

- [ ] Does it integrate well with other specs?

- [ ] Is it testable?

- [ ] Is it maintainable?

## Common Pitfalls to Avoid

### Ambiguity

- **Vague language**: "The system should handle errors gracefully"

- **Missing details**: Not specifying exact error handling behavior

- **Unclear relationships**: Not explaining how components interact

### Inconsistency

- **Different terms**: Using different terms for the same concept

- **Inconsistent formatting**: Not following established patterns

- **Conflicting requirements**: Requirements that contradict other specs

### Incompleteness

- **Missing edge cases**: Not considering unusual scenarios

- **Incomplete examples**: Examples that don't cover all cases

- **Missing error handling**: Not specifying what happens when things go wrong

## Related Rules

- [spec-compliance-investigation.mdc](mdc:.cursor/rules/spec-compliance-investigation.mdc)

How to investigate spec-implementation discrepancies

- [base_overview.mdc](mdc:.cursor/rules/base_overview.mdc) Project structure and

conventionsAs it is obvious this is a very intricate rule with many criteria. For this you really need a reasoning and deep-thinking model that can also reason for extended times (many minutes are normal!) and call tools every now and then to get even more information. Models like o3, deepseek-r1 and the opus-series of claude really shine here.

Spec Compliance

---

description: Spec Compliance Investigation Guide

globs:

alwaysApply: false

---

# Spec Compliance Investigation Guide

## Overview

This rule provides a systematic approach for investigating discrepancies between

specifications and implementations, following the pattern established. Do not

change any code during this phase.

## Investigation Process

### 1. Initial Analysis

- **Locate specification**: Find the relevant spec file in [specs/](mdc:specs)

- **Identify implementation**: Find corresponding source code in [src/](mdc:src)

- **Check tests**: Review test files in [tests/](mdc:tests) for expected

behavior

- **Run tests**: Execute `poetry run pytest` to identify current failures

### 2. Systematic Comparison

For each specification section:

1. **Extract requirements** from spec file

2. **Examine implementation** in source code

3. **Compare outputs** with test snapshots

4. **Document discrepancies** with specific examples

### 3. Documentation Structure

Create analysis document in [tmp/spec\_[component]\_discrepancies.md](mdc:tmp/)

with:

```markdown

# [Component] Specification vs Implementation Discrepancies

## Executive Summary

Brief overview of findings and impact

## Key Discrepancies Found

### 1. [Category] - [Specific Issue]

**Specification:**

- Requirement details

**Implementation:**

- Current behavior

- ✅ Correct aspects

- ❌ Incorrect aspects

## Test Results

- Current test failures

- Output differences

## Impact Assessment

### High Impact Issues:

- Critical functionality problems

- User experience issues

### Medium Impact Issues:

- Consistency problems

- Formatting issues

### Low Impact Issues:

- Minor differences

- Style variations

## Recommendations

### Option 1: Update Spec to Follow Code

**What to change:**

- Specific spec modifications

**Pros:**

- Benefits of this approach

**Cons:**

- Drawbacks of this approach

### Option 2: Update Code to Follow Spec

**What to change:**

- Specific code modifications

**Pros:**

- Benefits of this approach

**Cons:**

- Drawbacks of this approach

### Option 3: Recommended Hybrid Approach

**Recommended Solution:**

- Phased implementation plan

**Rationale:**

- Why this approach is best

**Implementation Priority:**

- Immediate, short-term, medium-term tasks

```

## Quality Checklist

### Before Finalizing Investigation

- [ ] All specification sections reviewed

- [ ] Implementation code thoroughly examined

- [ ] Tests run and failures documented

- [ ] Impact assessment completed

- [ ] All three solution options evaluated

- [ ] Recommendation justified with rationale

- [ ] Implementation plan prioritized

### Documentation Quality

- [ ] Specific examples provided for each discrepancy

- [ ] Code snippets included where relevant

- [ ] Pros/cons clearly articulated

- [ ] Implementation steps detailed

- [ ] Priority levels assigned

## Related Rules

- [Base Project Overview](mdc:.cursor/rules/base-project-overview.mdc) Project

structure and conventions

- [Spec Guidelines](mdc:.cursor/rules/spec-guidelines.mdc) How to write

specificationsThis compliance-report also need a deep-thinking model, like the specification beforehand.

Tasks

---

description:

globs:

alwaysApply: false

---

## Task List creation

### Purpose

Translate a Product Requirements Document (PRD) into an executable Markdown task

list that a junior developer (human or AI) can follow without extra context.

### Output

- Create /tasks/<feature>/TASKS.md (overwrite if it exists).

- Markdown only, no prose around it.

- Epics = H2 headings (`## 1. <Epic>`).

- Tasks = unchecked check‑boxes (`- [ ] 1.1 <task>`).

- Sub‑tasks = indent one space under their parent (` - [ ] 1.1.1 <subtask>`).

- Create a /tasks/<feature>/Task*<Epic>*<task>\_<subtask>.md (i.e.

`Task_3_2_4.md` for Epic 3, Task 2, Subtask 4)

### Process

1. Read the tagged PRD.

2. **Investigate** the current state of the repository to collect answers to

your first questions. All specs for fixed behaviours and outputs are located

in `specs/`. **Consult those** as a source first before trying to

reverse-engineer from the code.

If specs are in need of change then this is also a task to be generated.

3. If critical info is missing and cannot be answered by looking at the code,

ask max five clarifying questions (Q1 … Q5) and stop until answered.

4. After questions are answered think about the answers and: Either: look at the

code again, then goto 3., and ask for further clarification Or: Reply

exactly: Ready to generate the subtasks – respond **go** to proceed.

5. On a user message that contains only the word "go" (case‑insensitive): a.

Generate /tasks/<feature>/TASKS.md following _Output_ spec. b. Reply with:

TASKS.md created – review them.

6. After TASKS.md was reviewed, create `Task_<e>_<t>_<s>.md` for each task and

subtask containing implementation hints like relevant specs (link them!),

primary files to edit/review for this task, tests needing change, etc.

7. Stop. Do **not** begin executing tasks in this rule.

### Writing guidelines

- Each item ≤120 characters, start with an action verb.

- Hints are allowed below each item as HTML-Comment and do not count against the

120 characters.

- Group related work into logical epics with ≤7 direct child items.

- Prefer concrete file paths, commands, specs or APIs when available.

- Skip implementation details obvious from the codebase in the overview.

- If a task only concerns up to 5 files, name them in the detailed file.

Otherwise give hints on how to search for them (i.e. "everything under

`src/models/`").

### Safety rails

- Never touch production data.

- Assume all work happens in a feature branch, never commit directly to main.

- Check the generated Document with `markdownlint` (if available), apply

auto-fixes and fix the remaining issues manually.This also works better with one of those deep-thinking models.

Other Rules

I have some other rules with guidelines on how to write proper tests, one for “just follow the tasks in TASKS.md one by one until done with commit after each task”, etc. Those are omitted for brevity. Also they are so simple, that non-reasoning-models can follow them. Cheap claude3-sonnet or small, specialised coding-LLMs are enough to get the job done with this preparation.

Example: Rules in Action

The codebase we look at here is a project called gitlab_overviewer. It takes GitLab-api-keys and generates nice overviews for tracking metadata in different projects across different groups. With a nice export to markdown (for rendering in GitLab itself) and quarto (for exporting to i.e. confluence) with multiple pages etc. pp.

The current issue is, that due to a complete rewrite we are happy with the current output, but there are some minor problems still remaining. Some of them may also due to specs being incorrect, because requirements or ideas changed over time.

Analyzing the situation

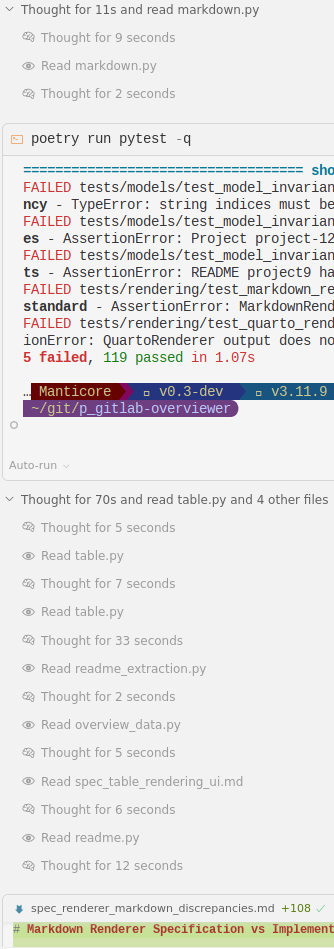

For the analysis o3 was used and it was sufficient on first try. Here is (part of) the conversation:

@spec-compliance-investigation.mdc do a spec-compliance investigation on @spec_renderer_markdown.md . The relevant modules in the @/src should link to the spec (omissions are possible and also out-of-spec).

tmp/spec_renderer_markdown_discrepancies.md:

Markdown Renderer Specification vs Implementation Discrepancies

Executive Summary

The current

render_grouped_markdownimplementation provides most of the layout and structural requirements defined inspecs/spec_renderer_markdown.md. However, several key aspects diverge from the specification, leading to failing approval tests and user-visible issues. The most critical problems concern ordering guarantees and the content chosen for the Description callout.Key Discrepancies Found

1. Ordering – Projects inside detailed sections

Specification:

- Projects in the detailed part “appear in the same order they had inside their group’s summary table” (Spec §4, bullet 3).

Implementation:

# ... excerpt ... for od in sorted(group_projects, key=lambda p: p.project.name.lower()): # renders project sections alphabetically

- Projects are sorted alphabetically, not in the original summary-table order.

- ✅ Groups themselves are ordered by descending project count (matches spec).

- ❌ Project order within groups breaks the “mirror the incoming, already-sorted data” rule.

2. Description callout picks raw front-matter if no

descriptionkey existsSpecification:

- If front-matter contains a

descriptionkey → use its value (callout-typetip).- Else if

Readme.contentis not empty → use that content (callout-typewarning).Readme.contentis defined as the first real paragraph without front-matter (see Model spec).Implementation:

- Correctly checks for

.raw_frontmatter["description"]first ⬆️.- Then falls back to

od.readme.contentunconditionally. In practice many fixture READMEs still include--- …YAML because the pre-processing step did not strip it, so the callout now contains the entire front-matter block – cf. failing snapshot diff lines starting with> --- "author":.- ✅ Callout types (

tip/warning/danger) are chosen per spec.- ❌ Content for the warning variant violates the “no raw front-matter” expectation and visual quality.

8< ……… 8<

Test Results

poetry run pytest -q → 5 failures / 119 passed • tests/rendering/test_markdown_renderer_approval.py (output mismatch) • tests/rendering/test_quarto_renderer_approval.py (cascade – shares ordering logic) • tests/models/test_model_invariants.py (3 failures unrelated to renderer)The Markdown approval diff highlights exactly the two major discrepancies above (alphabetical ordering & raw front-matter in callout).

Impact Assessment

High Impact Issues

- Incorrect project ordering – breaks reproducibility guarantees and user expectations; also propagates to Quarto renderer.

- Raw YAML in description callout – severely harms readability of generated documentation.

Medium Impact Issues

- None identified besides cosmetic phrasing.

Low Impact Issues

- Minor stylistic deviations (e.g., spacing) appear compliant within tolerance.

Recommendations

Option 1: Update Spec to Follow Code

What to change:

- Relax ordering requirement to alphabetical order.

- Allow raw front-matter YAML in description callout.

Pros: No code changes; quick test green.

Cons: Violates original design intent; exposes low-level data to end-users; poor UX.

Option 2: Update Code to Follow Spec (preferred)

What to change:

- Remove the

sorted(...)call to preserve input ordering.- Ensure

Readme.contenttruly excludes YAML front-matter (useparse_readmeduring data ingestion or strip before rendering).Pros: Restores spec fidelity; fixes approval tests; better documentation quality.

Cons: Requires code edit & possibly data-loading adjustments.

Option 3: Hybrid Approach

- Fix ordering in renderer.

- Keep current fallback but truncate front-matter to first paragraph until improved extraction available.

Recommended Solution: Option 2 – small, well-scoped patches bring implementation back in line with spec and tests.

Implementation Priority:

- Immediate: ordering fix & front-matter stripping.

- Short-term: add regression tests for ordering.

- Medium-term: audit other renderers (Quarto) for same issues.

I have completed the investigation phase and produced a detailed report at

tmp/spec_renderer_markdown_discrepancies.mdKey take-aways:

• Two core deviations from the Markdown-renderer spec were found

- Project ordering in the detailed section is alphabetical instead of mirroring the summary order.

- When a README lacks a

description:key, the Description call-out falls back to raw front-matter, so YAML blocks are rendered verbatim.

• These issues are the root cause of the failing approval tests.

• A concise impact assessment and three solution options are documented; the recommended path is to fix the code to match the specification.

Let me know if you’d like to proceed with the recommended fixes or explore the alternate options.

Correcting the analysis

By reading the analysis i soon noticed, that things were missing out of the spec. So i added a bit of text:

1. Ordering – Projects inside detailed sections

Specification: > […snip…]

- ❌ Project order within groups breaks the “mirror the incoming, already-sorted data” rule.

Comment:

- The implemented behaviour is using alphabetical order in the detailed section and in the table according to the sort defined in the

@spec_table_config. The spec needs updating.

2. Description callout picks raw front-matter if no description key exists

Specification: > […snip…]

- ❌ Content for the warning variant violates the “no raw front-matter” expectation and visual quality.

Comment:

- This is a violation of spec. But additionally it should only render the first 10 lines of

Readme.contentand then add a…continues…if there are more lines.

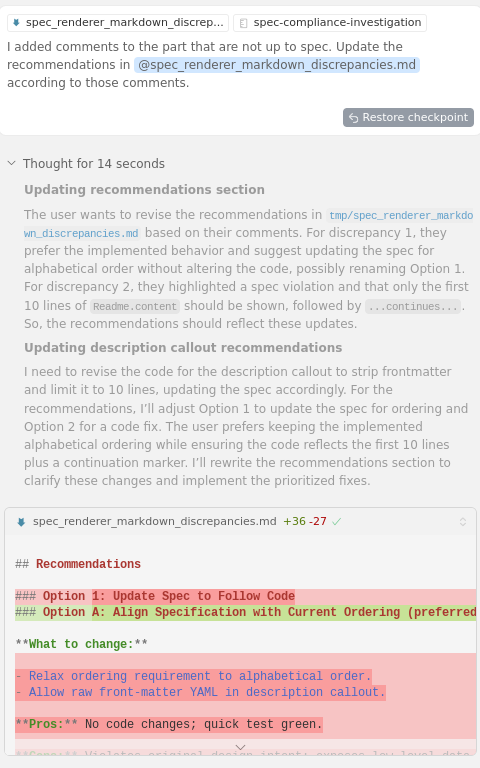

Armed with those correction i told the LLM to generate better recommendations, that align with my goals. The LLM then deleted the whole recommendations-section and wrote exactly what i wanted. Again o3 was used for the slow and deep thinking.

I added comments to the part that are not up to spec. Update the recommendations in @spec_renderer_markdown_discrepancies.md according to those comments.

3 yaya .. i know. Antropomorphing an AI.

tmp/spec_renderer_markdown_discrepancies.md:

// … existing code …

Recommendations

Option A: Align Specification with Current Ordering (preferred)

What to change:

- Amend

spec_renderer_markdown.md§4 to state that projects in the detailed section appear alphabetically by project name. This legitimises the current implementation and removes the ordering-related approval failure.- Keep the existing implementation for project ordering unchanged.

- Change the renderer implementation for the Description call-out:

- Strip YAML front-matter from

Readme.content(useparse_readme).- Render at most the first 10 non-empty lines of the resulting text.

- If more lines remain, append a marker

…continues…as the final line inside the call-out.- Add corresponding wording to the spec clarifying the 10-line truncation rule.

Pros:

- Least intrusive to working behaviour users already rely on.

- Fixes readability issue without reordering content.

- Smaller diff in test fixtures (only call-out content).

Cons:

- Requires a spec update (but that is a documentation-only task).

Option B: Keep Original Spec Ordering, Change Code

What to change:

- Remove

sorted()so project order mirrors summary-table order.- Apply the same Description call-out improvements as in Option A.

Pros:

- Upholds original design intent of summary/detailed symmetry.

Cons:

- Generates a larger diff for existing artefacts; may surprise current users.

Recommended Solution

Proceed with Option A:

- Update the specification paragraph on ordering.

- Implement the 10-line truncation +

…continues…logic in the renderer.Implementation Priority

- Immediate: implement call-out truncation logic & adjust approval snapshots.

- Immediate: update Markdown renderer spec for alphabetical ordering and call-out truncation.

- Short-term: add regression tests for the new truncation rule.

- Medium-term: propagate the truncation rule to the Quarto renderer to keep output parity. // … existing code …

The “Recommendations” section has been rewritten to reflect your comments:

1. Ordering: keep the current alphabetical behaviour and adjust the spec instead.

2. Description call-out: implement a 10-line preview with a “…continues…” marker after stripping front-matter.

These points are now captured as Option A (preferred), with a revised implementation roadmap.

Let me know when you’d like me to start on the code/spec changes.

Creating Tasks

Now that we have a clear plan without touching any code, lets flesh out the Details.

@generate-tasks.mdc generate Tasks for Option A of @spec_renderer_markdown_discrepancies.md under a feature called “markdown_rendering”.

TASKS.md:

1. Spec updates

2. Markdown renderer implementation

3. Quarto renderer alignment

4. Tests & snapshots

5. Documentation & housekeeping

Task_1_1.md:

Task 1.1 – Amend alphabetical ordering rule in spec_renderer_markdown.md

Relevant spec:

specs/spec_renderer_markdown.md(§4 Ordering Guarantees)Goal: Replace the bullet “projects appear in the same order they had inside their group’s summary table” with “projects appear alphabetically by their name”.

Primary files:

specs/spec_renderer_markdown.mdHints:

- Search for the existing ordering clause (§4 bullet 3) and update accordingly.

- Run

markdownlintafter editing:npx markdownlint-cli2 specs/spec_renderer_markdown.md --fix.

Task_1_2.md:

Task 1.2 – Document 10-line truncation rule for Description call-out

Relevant spec:

specs/spec_renderer_markdown.md(§3 Project Detail Sections)Goal: Add a subsection clarifying that when the Description call-out uses

Readme.content, the renderer must:

- Strip YAML front-matter.

- Render the first 10 non-empty lines.

- Append

…continues…if more content was omitted.Primary files:

specs/spec_renderer_markdown.mdHints:

- Place this clarification right after rule 3.1 in §3.

- Provide a small Markdown example block.

- Remember to update any cross-links.

[…further tasks omitted for brevity…]

Executing

With all that detailed instructions changes to the code are narrowly focused and the changes are easily reviewable. Execution does not need any deep thinking and can be done by a fast and cheap code-model like gemini-2.5, claude3-sonnet, etc.

They usually just loads all relevant files as context, work step-by-step, verify, tick off the task and report back for review.

@xxx-work-on-tasks.mdc @/markdown_rendering Implement all Task1\*.md.

I’ll help you implement the tasks from Task1*.md in the markdown rendering feature. Let me first check what these tasks entail.

Let me first check the current state of the spec file we need to modify.

I’ll now implement both tasks. Let’s start with Task 1.1 - amending the alphabetical ordering rule.

// ... existing code ...

## 4. Ordering Guarantees

- Groups are ordered by the number of Projects rendered (alphabetically on a

tie).

- Summary project order mirror the incoming, already-sorted data (see

[Table Sorting](./spec_table_sorting.md)).

- In the detailed part, projects appear alphabetically by their name.

// ... existing code ...Now let’s implement Task 1.2 - documenting the 10-line truncation rule for the Description call-out.

// ... existing code ...

Missing entries from the Project-Info are omitted.

When using `Readme.content` in a Description call-out, the renderer must:

```markdown

1. Strip any YAML front-matter from the content

2. Include only the first 10 non-empty lines

3. If content was truncated, append "…continues…" on a new line

```

Example:

```markdown

> [!warning] Description

>

> # My Project

>

> This is a great project that does many things. It has multiple features:

>

> - Feature 1

> - Feature 2

> - Feature 3

> - Feature 4 …continues…

```

2. **Release badge** – image linking to `<web_url>/-/releases`. // ...

existing code ...Let me run markdownlint as suggested in the task hints to ensure our changes meet the markdown style guidelines.

I’ve completed both tasks:

- Task 1.1: Updated the ordering rule in section 4 to specify that projects appear alphabetically by their name in the detailed part, replacing the previous rule about maintaining summary table order.

- Task 1.2: Added documentation about the Description call-out truncation rules after section 3.1, including:

- The three required steps (strip YAML front-matter, render first 10 non-empty lines, append “…continues…”)

- A clear example showing how the truncation should look

The markdownlint output shows some line length issues and a heading issue, but these appear to be pre-existing in the file and not related to our changes. The changes we made maintain the existing formatting style of the document.

Would you like me to address any of the markdownlint issues, or would you prefer to keep the changes focused on just implementing the two tasks?

And within 1 Minute everything was updated as expected and verified within a few seconds. Here you can really see the advantages of this approach. Instead of going off-track and focusing i.e. on fixing the markdownlint-issues4 the agent notes that this is still an issue, but offers to keep focused on the tasks for the moment. With a simple ‘go’ i can continue tackle the next thing. 5 Minutes later everything is done and we have one less issue.

4 As i have often expirienced with LLMs trying to be too perfect and pleasing the user without ever stopping

Result

In the fast-evolving field of AI-assisted software development, my experience with Cursor’s specification-driven workflow has yielded tangible outcomes:

- Code Quality: By enforcing strict compliance checks and structured task creation, redundant code generation was reduced. The LLM still gets excited to “help”, but now it’s actually contributing meaningful changes instead of reinventing the wheel.

- Development Efficiency: The PRD-first approach has cut try&error time significantly. Before, getting the things i wanted was hit&miss. Now i “read the agents mind” and correct it during specification-creation.

- Project Integrity: Through rigorous spec adherence and systematic task breakdown, the codebase is more consistent, much better documented and decently tested5.

- Reduced Cognitive Load: The Cursor rule system has made it easier to focus on actual development tasks instead of wrestling with the AI’s sometimes questionable assumptions - or just installing a third different testing framework for exactly this module.

5 You know.. noone likes writing tests - and the person writing the code should never write the tests anyway. If you haven’t thought of something while coding, chanches are, that you miss that edge-case during testing as well.

In this gitlab_overviewer case study, i tried to show at an easy example, that this method works and can yields great outcomes. Even small discrepancies in the codebase tend to pop up during spec-reviews (which can be automated!). Next up would be running those in some kind of CI-job and integrating tools like issue-tracking into the agent instead of simple markdown-files in the repository as makeshift issue-tracker. But not by me for the foreseeable future, so if you are looking for a project, feel free!

All in all this isn’t a silver bullet for all AI-assisted development problems, but it’s made my coding experience with Cursor much more productive and predictable. It turns out treating an AI as a slightly overeager junior developer who needs clear instructions works better than hoping it’ll just “do the right thing”.

References

Citation

@online{dresselhaus2025,

author = {Dresselhaus, Nicole},

title = {Field {Report:} {Coding} in the {Age} of {AI} with {Cursor}},

date = {2025-06-26},

url = {https://drezil.de/Writing/coding-age-ai.html},

doi = {10.5281/zenodo.16633122},

langid = {en},

abstract = {This report presents practical methodologies and best

practices for developing software using Cursor, an AI-assisted IDE.

The paper details a structured workflow that emphasizes

specification-driven development, comprehensive documentation

practices, and systematic task management to maintain quality

control when working with language models. Through detailed examples

and rule configurations, it demonstrates how to leverage AI

capabilities while mitigating common pitfalls such as redundant code

generation and context limitations. The methodology presented

includes comprehensive PRD (Product Requirement Document) creation,

specification adherence checks, and task breakdown systems that

ensure alignment between human intent and AI execution. This guide

serves as a practical reference for developers seeking to

effectively integrate AI tools into their software development

workflow while maintaining project integrity and scalability.}

}